Output¶

Testplan provides several test output styles through its built-in test report exporters. They are described below.

Console¶

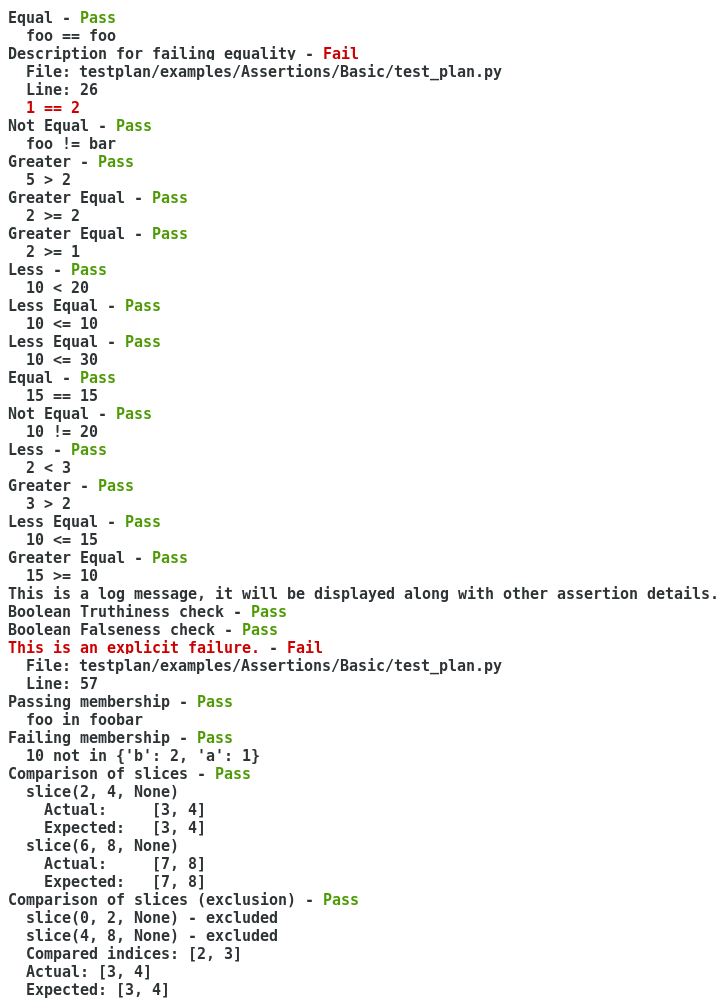

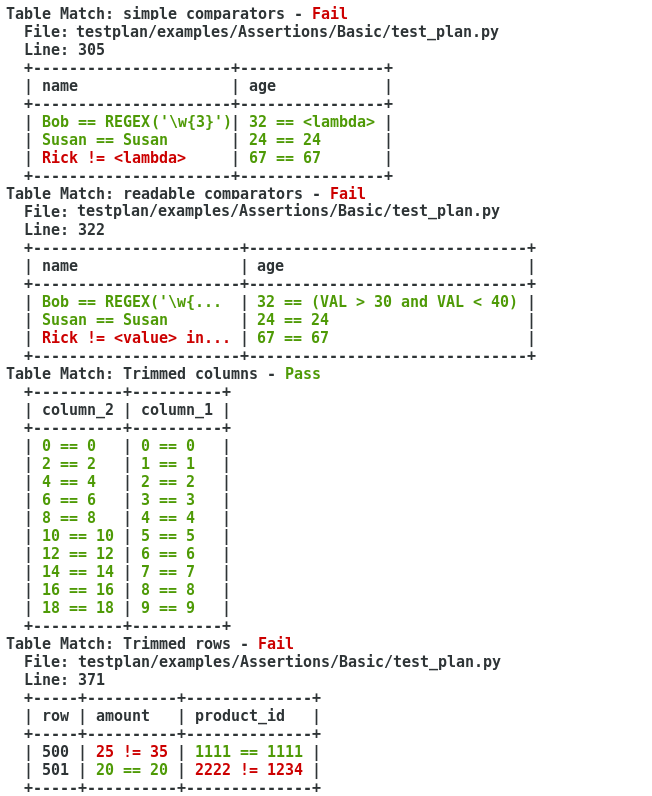

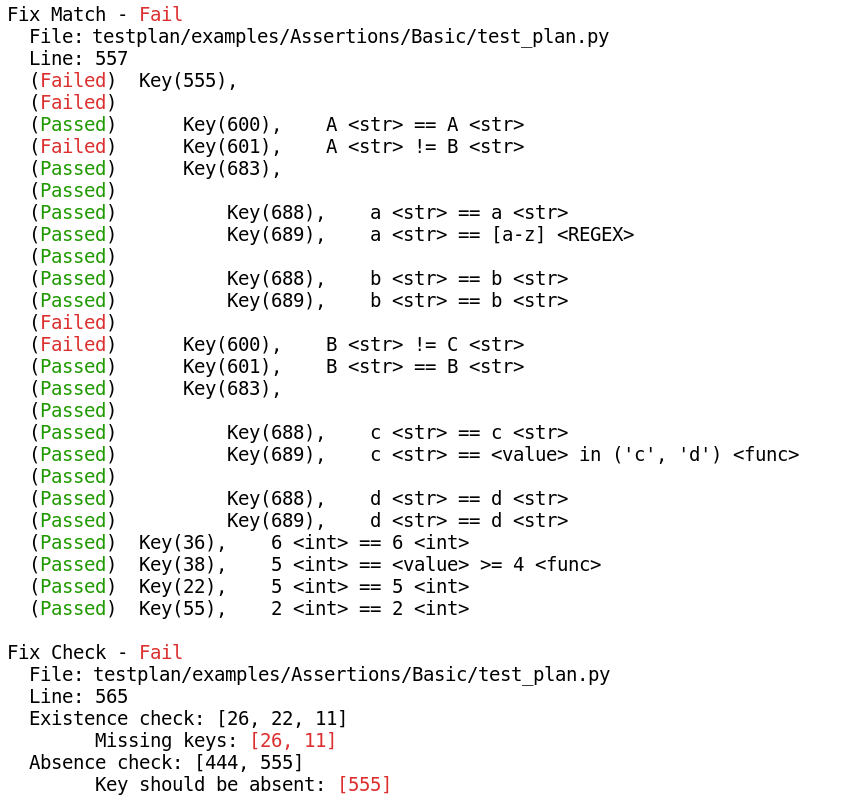

Depending on the verbosity specified via command line options or programmatically (see the downloadable example that demonstrates this), results are printed to the console at the assertion, testcase, testsuite or MultiTest level:

Basic assertions verbose output:

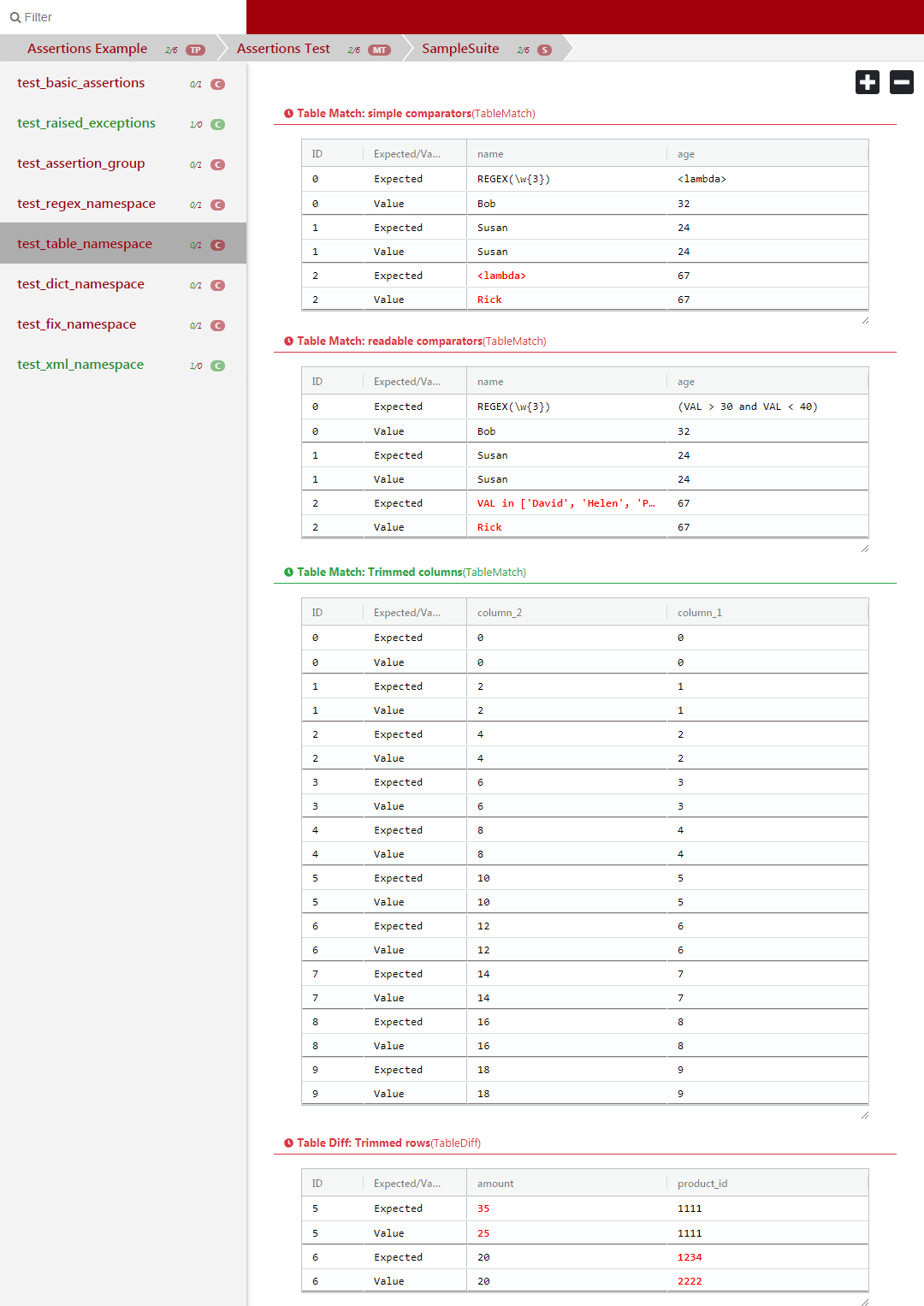

Table assertions verbose output:

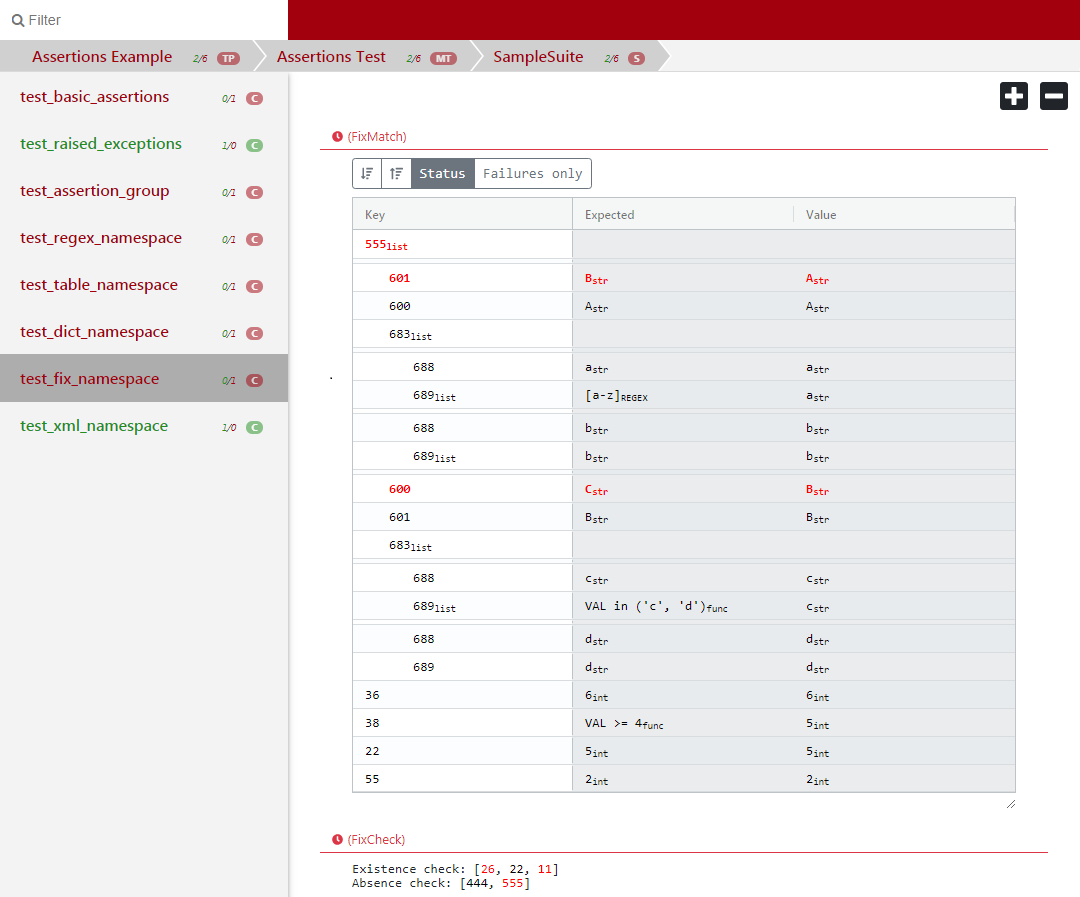

Fix/Dict match assertions verbose output:

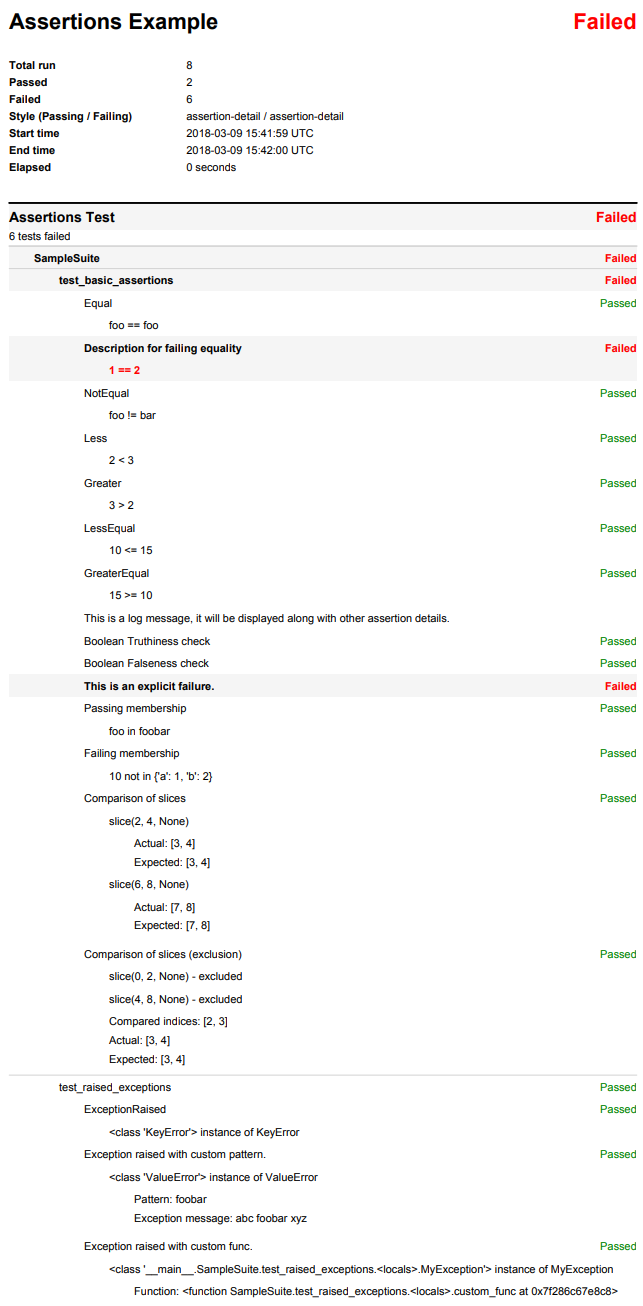

PDF¶

PDF output is conceptually similar to console output, but it is used when persistent test evidence is needed. PDF reports can be generated either via command line options or programmatically via Testplan configuration options.

Examples for PDF report generation can be seen here.

Basic assertions PDF representation:

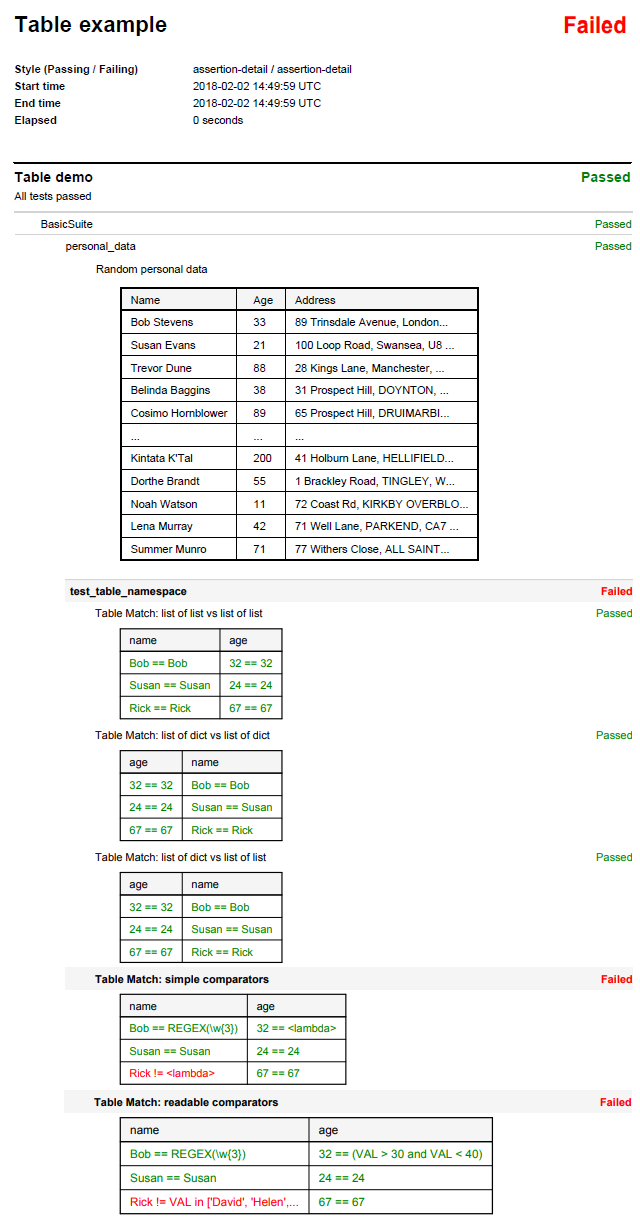

Table assertions PDF representation:

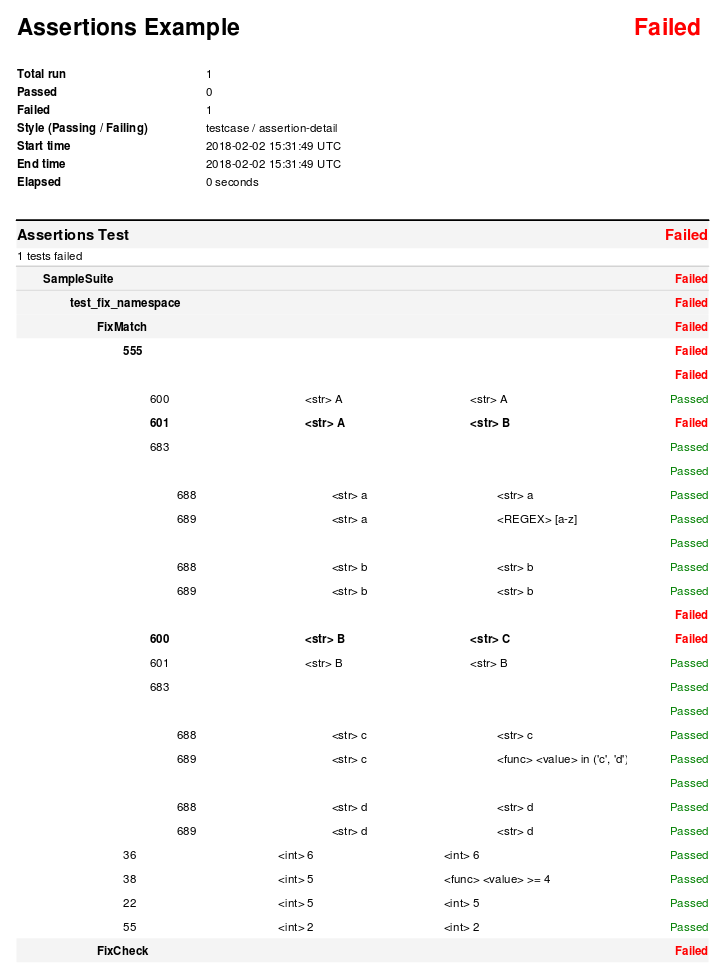

Fix/Dict match assertions PDF representation:

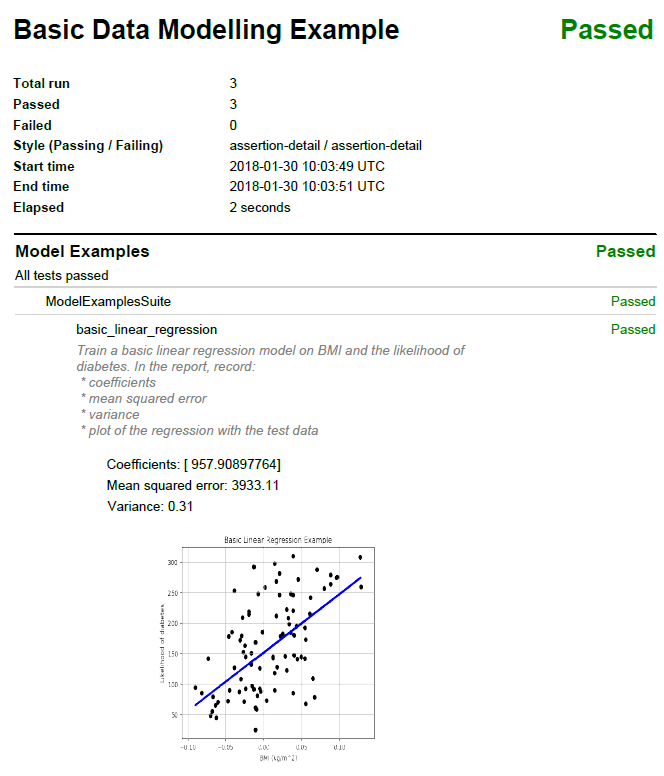

Matplot assertions PDF representation:

Testplan uses Reportlab to generate test reports in PDF format. The simplest way to enable this functionality is to pass the --pdf command line argument:

    $ ./test_plan.py --pdf my-report.pdf
    [MultiTest] -> Pass
    [Testplan] -> Pass
    PDF generated at my-report.pdf

PDF report generation can also be declared programmatically:

    @test_plan(name='SamplePlan', pdf_path='my-report.pdf')
    def main(plan):
        # Testplan implicitly creates PDF exporter if we just pass `pdf_path`
        ...

A more explicit usage is to initialize a PDF exporter directly:

    from testplan.exporters.testing import PDFExporter

    @test_plan(
        name='SamplePlan',
        exporters=[
            PDFExporter(pdf_path='my-report.pdf')
        ]
    )
    def main(plan):
        ...

PDF reports can contain different levels of detail, configured via styling options. These options can be specified:

via command line:

    $ ./test_plan.py --pdf my-report.pdf --pdf-style extended-summary

programmatically:

    from testplan.report.testing.styles import Style, StyleEnum

    @test_plan(
        name='SamplePlan',
        pdf_path='my-report.pdf',
        pdf_style=Style(
            passing=StyleEnum.ASSERTION,
            failing=StyleEnum.ASSERTION_DETAIL
        )
    )
    def main(plan):
        ...

Read more about output styles here.

Tag filtered PDFs¶

If a plan has a very large number of tests, it may be better to generate multiple PDF reports (filtered by tags) rather than a single report.

Testplan provides this functionality via tag filtered PDF generation, which can be enabled with the --report-tags and --report-tags-all arguments.

Example tagged testsuite and testcases:

    @testsuite(tags='alpha')
    class SampleTestAlpha(object):

        @testcase(tags='server')
        def test_method_1(self, env, result):
            ...

        @testcase(tags='database')
        def test_method_2(self, env, result):
            ...

        @testcase(tags=('server', 'client'))
        def test_method_3(self, env, result):
            ...

The command below will generate two PDFs: the first will contain results from tests tagged with database, the second will contain results from tests tagged with server OR client. A new PDF is generated for each --report-tags / --report-tags-all argument.

$ ./test_plan.py --report-dir ./reports --report-tags database --report-tags server client

Equivalent programmatic declaration for the same reports would be:

    @test_plan(
        name='SamplePlan',
        report_dir='reports',
        report_tags=[
            'database',
            ('server', 'client')
        ]
    )
    def main(plan):
        # Testplan implicitly creates Tag Filtered PDF exporter if we pass
        # the `report_tags` / `report_tags_all` arguments.
        ...

A more explicit usage is to initialize a Tag Filtered PDF exporter directly:

    from testplan.exporters.testing import TagFilteredPDFExporter

    @test_plan(
        name='SamplePlan',
        exporters=[
            TagFilteredPDFExporter(
                report_dir='reports',
                report_tags=[
                    'database',
                    ('server', 'client')
                ]
            )
        ]
    )
    def main(plan):
        ...

Examples for Tag filtered PDF report generation can be seen here.
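The OR matching described above, and the stricter matching that the name --report-tags-all suggests, can be sketched in plain Python. This is only an illustration of the selection logic, not Testplan's implementation: the helper names (`matches_any`, `matches_all`, `select`) are hypothetical, and it assumes that testcases inherit the suite-level tag `alpha` and that --report-tags-all requires every listed tag to be present.

```python
# Effective tags per testcase from the example suite above.
# Assumption: the suite-level tag 'alpha' is inherited by each testcase.
TESTCASES = {
    "test_method_1": {"alpha", "server"},
    "test_method_2": {"alpha", "database"},
    "test_method_3": {"alpha", "server", "client"},
}


def matches_any(test_tags, report_tags):
    """OR semantics: at least one requested tag is present."""
    return bool(set(test_tags) & set(report_tags))


def matches_all(test_tags, report_tags):
    """AND semantics (assumed for --report-tags-all): every requested tag is present."""
    return set(report_tags) <= set(test_tags)


def select(report_tags, match):
    """Return the sorted names of testcases that would land in one filtered report."""
    return sorted(
        name for name, tags in TESTCASES.items() if match(tags, report_tags)
    )


print(select({"database"}, matches_any))          # ['test_method_2']
print(select({"server", "client"}, matches_any))  # ['test_method_1', 'test_method_3']
print(select({"server", "client"}, matches_all))  # ['test_method_3']
```

Under these assumptions, the first two calls correspond to the two PDFs produced by the command above.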

XML¶

XML output can be generated for each MultiTest in a plan, and can be used as an alternative format for persistent test evidence. Testplan supports XML exports compatible with the JUnit format:

    <testsuites>
        <testsuite tests="3" errors="0" name="AlphaSuite" package="Primary:AlphaSuite" hostname="hostname.example.com" failures="1" id="0">
            <testcase classname="Primary:AlphaSuite:test_equality_passing" name="test_equality_passing" time="0.138505"/>
            <testcase classname="Primary:AlphaSuite:test_equality_failing" name="test_equality_failing" time="0.001906">
                <failure message="failing equality" type="assertion"/>
            </testcase>
            <testcase classname="Primary:AlphaSuite:test_membership_passing" name="test_membership_passing" time="0.00184"/>
            <system-out/>
            <system-err/>
        </testsuite>
    </testsuites>

To enable this functionality, use --xml and provide a directory for the XML files. An XML file is generated for each MultiTest in your plan.

$ ./test_plan.py --xml /path/to/xml-dir

XML generation can also be declared programmatically:

    @test_plan(name='SamplePlan', xml_dir='/path/to/xml-dir')
    def main(plan):
        # Testplan implicitly creates XML exporter if we just pass `xml_dir`
        ...

A more explicit usage is to initialize an XML exporter directly:

    from testplan.exporters.testing import XMLExporter

    @test_plan(
        name='SamplePlan',
        exporters=[
            XMLExporter(xml_dir='/path/to/xml-dir')
        ]
    )
    def main(plan):
        ...

Examples for XML report generation can be seen here.
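Because the XML output follows the JUnit layout, it can be inspected with standard tooling. The following is a minimal sketch using only Python's standard library; the `failed_testcases` helper is a hypothetical name, and the input is an abbreviated copy of the sample shown earlier in this section rather than a file produced by a real run.

```python
import xml.etree.ElementTree as ET

# Abbreviated copy of the JUnit-compatible sample from this section.
SAMPLE_XML = """\
<testsuites>
  <testsuite tests="3" errors="0" name="AlphaSuite" failures="1" id="0">
    <testcase classname="Primary:AlphaSuite:test_equality_passing" name="test_equality_passing" time="0.138505"/>
    <testcase classname="Primary:AlphaSuite:test_equality_failing" name="test_equality_failing" time="0.001906">
      <failure message="failing equality" type="assertion"/>
    </testcase>
    <testcase classname="Primary:AlphaSuite:test_membership_passing" name="test_membership_passing" time="0.00184"/>
  </testsuite>
</testsuites>
"""


def failed_testcases(xml_text):
    """Return (suite name, testcase name, failure message) for each failure."""
    root = ET.fromstring(xml_text)
    failures = []
    for suite in root.iter("testsuite"):
        for case in suite.iter("testcase"):
            for failure in case.findall("failure"):
                failures.append(
                    (suite.get("name"), case.get("name"), failure.get("message"))
                )
    return failures


print(failed_testcases(SAMPLE_XML))
# [('AlphaSuite', 'test_equality_failing', 'failing equality')]
```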

JSON¶

A JSON file fully represents the test data. Testplan supports serialization and deserialization of test reports, meaning that a report can be stored as a JSON file and then loaded back into memory to generate other kinds of output (e.g. PDF, XML or any custom export target).

Sample JSON output:

    {"entries": [{
        "category": "multitest",
        "description": null,
        "entries": [{
            "category": "suite",
            "description": null,
            "entries": [{
                "description": null,
                "entries": [{
                    "category": null,
                    "description": "passing equality",
                    "first": 1,
                    "label": "==",
                    "line_no": 54,
                    "machine_time": "2018-02-05T15:16:40.951528+00:00",
                    "meta_type": "assertion",
                    "passed": true,
                    "second": 1,
                    "type": "Equal",
                    "utc_time": "2018-02-05T15:16:40.951516+00:00"}
                ],
                "logs": [],
                "name": "passing_testcase_one",
                "status": "passed",
                "status_override": null,
                "tags": {},
                "tags_index": {},
                "timer": {
                    "run": {
                        "end": "2018-02-05T15:16:41.164086+00:00",
                        "start": "2018-02-05T15:16:40.951456+00:00"}},
                "type": "TestCaseReport",
                "uid": "9b4467e2-668c-4764-942b-061ea58da0f0"
            },
            ...
            ],
            "logs": [],
            "name": "BetaSuite",
            "status": "passed",
            "status_override": null,
            "tags": {},
            "tags_index": {},
            "timer": {},
            "type": "TestGroupReport",
            "uid": "eeb87e19-ffcb-4710-8eeb-6daff89c46d9"}],
        "logs": [],
        "name": "MyMultitest",
        "status": "passed",
        "status_override": null,
        "tags": {},
        "tags_index": {},
        "timer": {},
        "type": "TestGroupReport",
        "uid": "bf44e942-c267-42b9-b379-5ec8c4c7878b"}
    ],
    "meta": {},
    "name": "Basic JSON Report Example",
    "status": "passed",
    "status_override": null,
    "tags_index": {},
    "timer": {
        "run": {
            "end": "2018-02-05T15:16:41.188904+00:00",
            "start": "2018-02-05T15:16:40.937402+00:00"
        }
    },
    "uid": "5d541277-e0c4-43c6-941b-dea2c7d3259c"
    }

A JSON report can be generated via the --json argument:

$ ./test_plan.py --json /path/to/json

The same result can be achieved programmatically:

    @test_plan(name='Sample Plan', json_path='/path/to/json')
    def main(plan):
        # Testplan implicitly creates JSON exporter if we just pass `json_path`
        ...

A more explicit usage is to initialize a JSON exporter directly:

    from testplan.exporters.testing import JSONExporter

    @test_plan(
        name='Sample Plan',
        exporters=[
            JSONExporter(json_path='/path/to/json')
        ]
    )
    def main(plan):
        ...

Examples for JSON report generation can be seen here.
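Since the JSON report is a nested tree of `entries`, it can be post-processed without Testplan itself. The sketch below walks a heavily trimmed stand-in for a report and collects the status of each testcase. It relies only on the `entries`, `type`, `name` and `status` keys visible in the sample output above; the `collect_case_statuses` helper is a hypothetical name, and real reports contain many more fields.

```python
import json

# Heavily trimmed stand-in for a report; only the keys used below are kept.
SAMPLE_REPORT = """
{
  "name": "Basic JSON Report Example",
  "status": "passed",
  "entries": [{
    "name": "MyMultitest",
    "type": "TestGroupReport",
    "status": "passed",
    "entries": [{
      "name": "BetaSuite",
      "type": "TestGroupReport",
      "status": "passed",
      "entries": [{
        "name": "passing_testcase_one",
        "type": "TestCaseReport",
        "status": "passed",
        "entries": [{"type": "Equal", "passed": true, "first": 1, "second": 1}]
      }]
    }]
  }]
}
"""


def collect_case_statuses(node, path=()):
    """Yield ("Plan/MultiTest/Suite/testcase", status) for every testcase entry."""
    if node.get("type") == "TestCaseReport":
        yield "/".join(path + (node["name"],)), node["status"]
        return
    # Group-level nodes: descend into their children, extending the path.
    for child in node.get("entries", []):
        yield from collect_case_statuses(child, path + (node["name"],))


report = json.loads(SAMPLE_REPORT)
for case_path, status in collect_case_statuses(report):
    print(case_path, status)
# Basic JSON Report Example/MyMultitest/BetaSuite/passing_testcase_one passed
```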

Browser¶

Testplan can store the report locally as a JSON file and then start a web server to load and parse it, so that the test report can conveniently be opened in a browser such as Chrome or Firefox, as shown below:

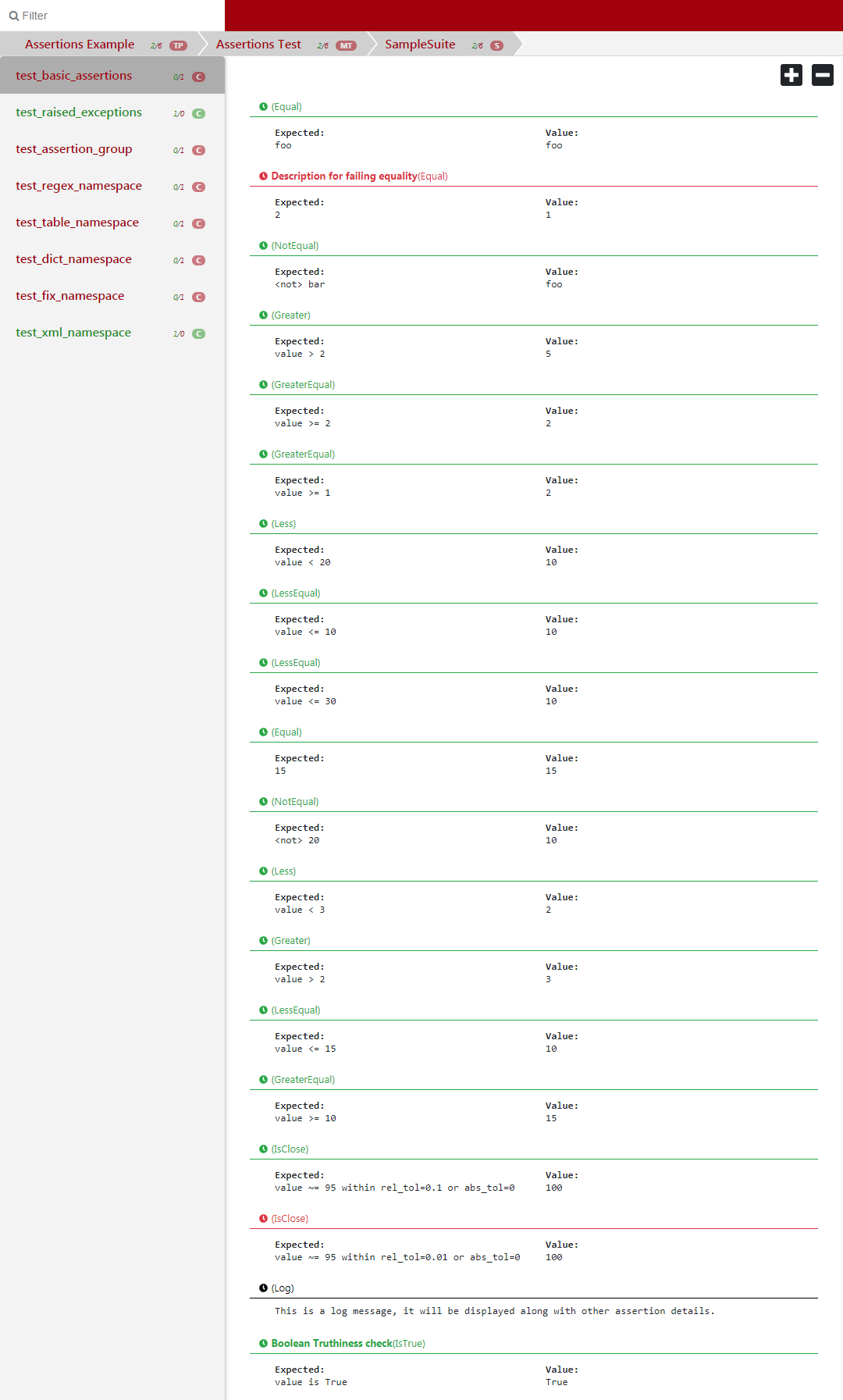

Basic assertions Browser UI representation:

Table assertions Browser UI representation:

Fix/Dict match assertions Browser UI representation:

The --ui command line option can be used to start a local web server after Testplan runs. A port number can be specified after the argument; if omitted, a random port will be used. Testplan will print a URL to the console, which can be opened in a browser to view the report.

$ ./test_plan.py --ui 12345

Or programmatically declare it:

    @test_plan(name='SamplePlan', ui_port=12345)
    def main(plan):
        # Testplan implicitly starts a web server so the user can browse the test report
        ...

This feature requires the install-testplan-ui script to have been run (see Install Testplan in the Getting Started section). If this script hasn’t been run, the web server will start but the report won’t load in the browser.

An alternative is to initialize a web server exporter directly. It is recommended to place this exporter last in the list, after all other exporters, because it causes Testplan to block while other exporters may still have post-export steps to complete.

    from testplan.exporters.testing import WebServerExporter

    @test_plan(
        name='Sample Plan',
        exporters=[
            WebServerExporter(ui_port=12345)
        ]
    )
    def main(plan):
        ...

Customization¶

Custom Exporter¶

You can define your own exporters by inheriting from the base exporter class and use them by passing them to the @test_plan decorator via the exporters list. Custom export functionality should be implemented within the export method. Each exporter in the exporters list will get a fresh copy of the original source (e.g. report).

    from typing import Dict, Optional

    from testplan import test_plan
    from testplan.common.exporters import ExportContext
    from testplan.exporters.testing import Exporter
    from testplan.report import TestReport

    class CustomExporter(Exporter):

        def export(
            self,
            source: TestReport,
            export_context: ExportContext,
        ) -> Optional[Dict]:
            # Custom logic goes here
            ...

    @test_plan(name='SamplePlan', exporters=[CustomExporter(...)])
    def main(plan):
        ...

Examples for custom exporter implementation can be seen here.

Output Styles¶

Certain output processors (e.g. stdout, PDF) make use of generic style objects for formatting.

A style object can be initialized with two arguments: the display levels for passing and failing tests. Each level should be one of the StyleEnum values (e.g. StyleEnum.CASE) or its lowercase string representation (e.g. 'case'):

    from testplan.report.testing.styles import Style, StyleEnum

    # These style declarations are equivalent.
    style_1_a = Style(passing=StyleEnum.TEST, failing=StyleEnum.CASE)
    style_1_b = Style(passing='test', failing='case')

Style levels are incremental, going from the least verbose to the most verbose:

    RESULT = 0            # Plan level output, the least verbose
    TEST = 1
    SUITE = 2
    CASE = 3
    ASSERTION = 4
    ASSERTION_DETAIL = 5  # Assertion detail level output, the most verbose

This means that with a declaration like Style(passing=StyleEnum.CASE, failing=StyleEnum.CASE) the output will include information from the plan level down to the testcase method level, but it will not include any assertion information.
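The incremental behaviour can be illustrated with a small stand-alone model. This is a sketch of the idea only, not Testplan's own code: the `Level` enum mirrors the values listed above, and `is_displayed` and `displayed_levels` are hypothetical helpers.

```python
from enum import IntEnum


class Level(IntEnum):
    """Illustrative copy of the levels listed above (not Testplan's class)."""
    RESULT = 0
    TEST = 1
    SUITE = 2
    CASE = 3
    ASSERTION = 4
    ASSERTION_DETAIL = 5


def is_displayed(item_level, style_level):
    """An item is shown when the configured level is at least the item's level."""
    return item_level <= style_level


def displayed_levels(style_level):
    """List the names of all levels that a given style level would display."""
    return [level.name for level in Level if is_displayed(level, style_level)]


print(displayed_levels(Level.CASE))
# ['RESULT', 'TEST', 'SUITE', 'CASE']
```

With the level set to CASE, neither ASSERTION nor ASSERTION_DETAIL appears in the result, which matches the description above.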

Here’s a simple schema that highlights the minimum styling level required to view each kind of test information:

    Testplan                                             -> StyleEnum.RESULT
    |
    +---- MultiTest 1                                    -> StyleEnum.TEST
    |     |
    |     +---- Suite 1                                  -> StyleEnum.SUITE
    |     |     |
    |     |     +--- testcase_method_1                   -> StyleEnum.CASE
    |     |     |    |
    |     |     |    +---- assertion statement           -> StyleEnum.ASSERTION
    |     |     |    +---- assertion statement
    |     |     |          ( ... assertion details ...)  -> StyleEnum.ASSERTION_DETAIL
    |     |     |
    |     |     +---- testcase_method_2
    |     |     +---- testcase_method_3
    |     |
    |     +---- Suite 2
    |           ...
    +---- MultiTest 2
          ...

Here is a sample usage of styling objects:

    from testplan.report.testing.styles import Style, StyleEnum

    @test_plan(
        name='SamplePlan',
        # On console output configuration
        # Display down to testcase level for passing tests
        # Display all details for failing tests
        stdout_style=Style(
            passing=StyleEnum.CASE,
            failing=StyleEnum.ASSERTION_DETAIL
        ),
        pdf_path='my-report.pdf',
        # PDF report configuration
        # Display down to basic assertion level for passing tests
        # Display all details for failing tests
        pdf_style=Style(
            passing=StyleEnum.ASSERTION,
            failing=StyleEnum.ASSERTION_DETAIL
        )
    )
    def main(plan):
        ...